AI Tech Stack: A Primer

This article offers an overview of the AI space, illustrating the wave of innovation and disruption sweeping across the tech stack.

From a 10,000-foot perspective, the AI foundational layer has seen remarkable advancements, transitioning from basic models for tasks like classification, prediction, and recommendation to sophisticated, dense models on the path to Artificial General Intelligence (AGI). This evolution in model capabilities necessitates innovative strides in the cloud infrastructure and hardware layers. Concurrently, this shift is disrupting the application and services layers, altering their value chains and business models.

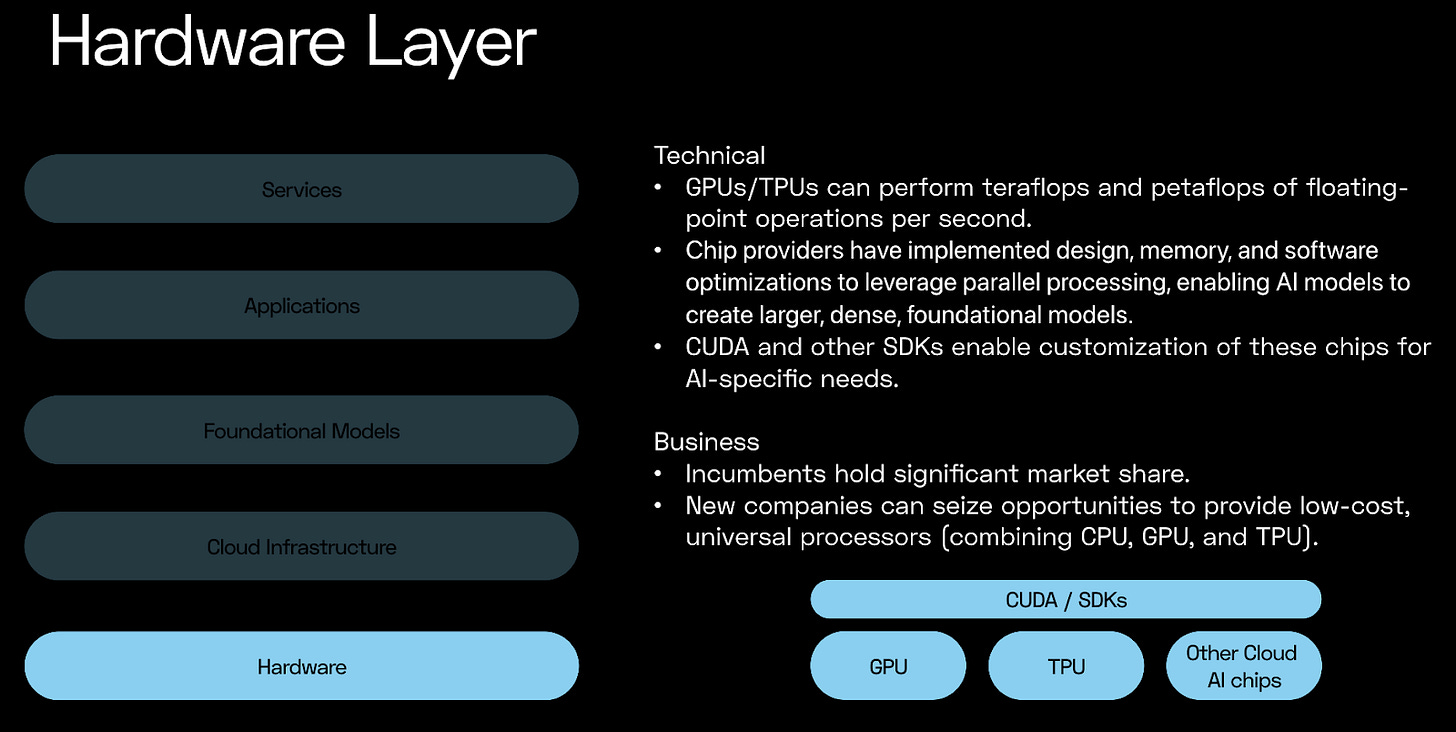

Hardware Layer

Chip providers in the hardware layer have significantly increased the computational power of their chips, reaching teraflops (TFLOPS), or trillions of floating-point operations per second, which is crucial for machine learning training workloads. Cloud providers now deliver over 100 petaflops (PFLOPS) of performance for specific machine-learning tasks. This performance hinges on various factors, including architecture improvements, memory bandwidth enhancements, and compiler optimizations. Chip providers also offer APIs and SDKs, enabling cloud providers, model builders, and application developers to tailor and optimize chip usage for their model training requirements.
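To make the TFLOPS-to-PFLOPS relationship concrete, here is a minimal arithmetic sketch. The per-chip figure of 250 TFLOPS and the utilization values are illustrative assumptions, not numbers from any specific vendor, and `chips_needed` is a hypothetical helper.

```python
import math

TERA = 10**12  # 1 TFLOPS = 10^12 floating-point operations per second
PETA = 10**15  # 1 PFLOPS = 10^15 floating-point operations per second


def chips_needed(target_flops: float, per_chip_flops: float,
                 utilization: float = 1.0) -> int:
    """Chips required to sustain target_flops, given each chip's peak
    throughput and the fraction of that peak actually achieved."""
    return math.ceil(target_flops / (per_chip_flops * utilization))


# Assumed example: a 100 PFLOPS cluster built from 250 TFLOPS chips.
print(chips_needed(100 * PETA, 250 * TERA))                   # 400
print(chips_needed(100 * PETA, 250 * TERA, utilization=0.5))  # 800
```

The second call shows why memory bandwidth and compiler optimizations matter: if only half of the peak throughput is usable, twice as many chips are needed for the same sustained performance.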

From a business perspective, a handful of fabless companies and cloud providers dominate this layer, offering internal, proprietary versions of their technology. However, new companies have the potential to disrupt this space with universal processors (integrating CPU, GPU, and TPU capabilities) that pair high performance with cost-efficiency. Despite the challenge of penetrating a market dominated by incumbents, these new entrants can make significant inroads by offering innovative solutions.

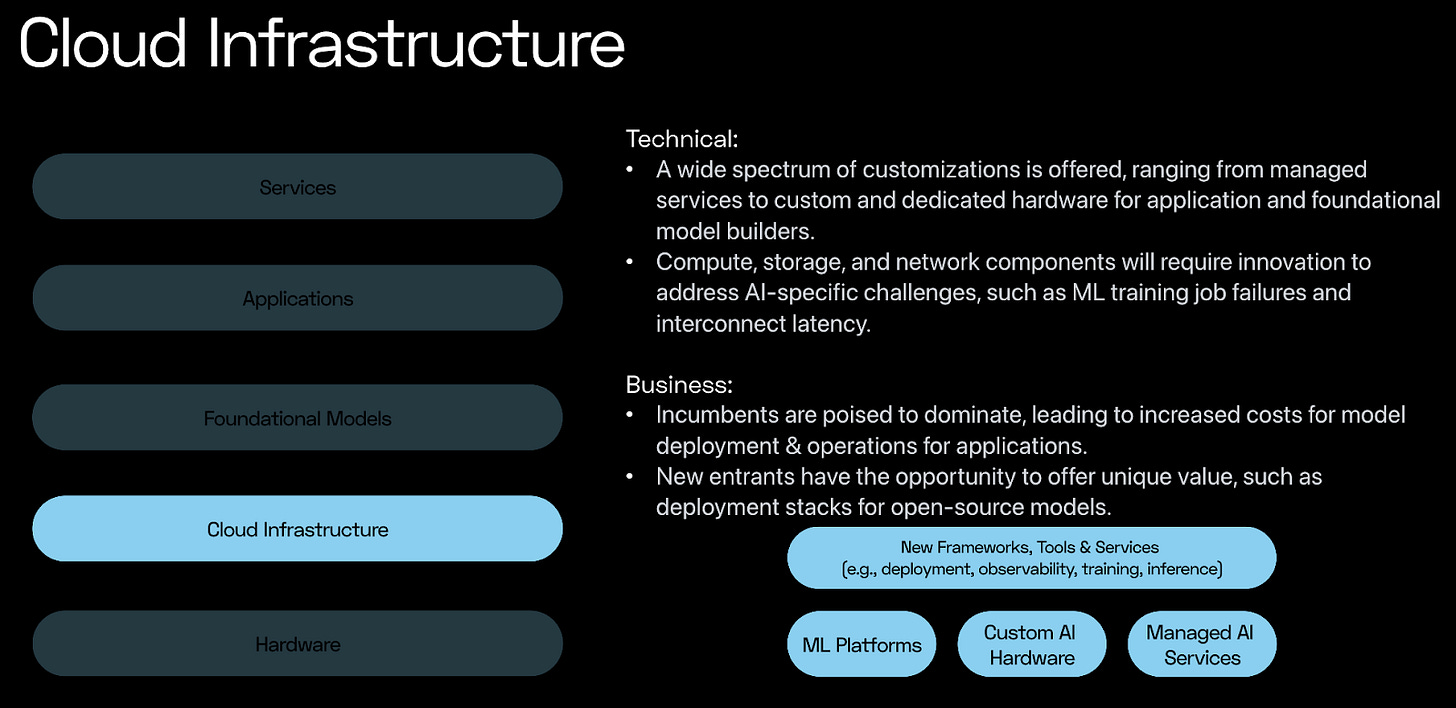

Cloud Infrastructure Layer

Cloud providers are not merely boosting efficiency; they're redesigning their infrastructure to meet the intensive demands of AI-driven applications. This involves:

Addressing AI-specific challenges such as mitigating machine learning training job failures, reducing interconnect latency, and managing substantial storage requirements for training jobs.

Offering a broad spectrum of options to model and application builders, ranging from managed services and platform usage to customized and dedicated hardware, tailored for native AI services. However, these choices may lead to increased deployment and operational costs for applications, posing a challenge for sustainable and profitable revenue models.
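One common way to mitigate training job failures is periodic checkpointing with resume. The simulation below is a minimal sketch of that idea, not a description of any provider's actual mechanism; `run_with_checkpoints` and its parameters are hypothetical names chosen for illustration.

```python
def run_with_checkpoints(total_steps, checkpoint_every, fail_at, max_restarts=3):
    """Simulate a training job that rolls back to its last checkpoint
    whenever a failure occurs.

    fail_at: step numbers at which a simulated failure happens (once each).
    Returns (steps_executed, restarts), where steps_executed counts
    repeated work after each rollback.
    """
    pending_failures = set(fail_at)
    last_checkpoint = 0   # step number of the most recent saved state
    steps_executed = 0
    restarts = 0
    step = 0
    while step < total_steps:
        next_step = step + 1
        if next_step in pending_failures:
            # Simulated crash: lose all progress since the last checkpoint.
            pending_failures.discard(next_step)
            restarts += 1
            if restarts > max_restarts:
                raise RuntimeError("too many restarts")
            step = last_checkpoint
            continue
        step = next_step
        steps_executed += 1
        if step % checkpoint_every == 0:
            last_checkpoint = step  # a real job would persist model state here
    return steps_executed, restarts


# A failure at step 35 costs 4 repeated steps with checkpoints every 10 steps...
print(run_with_checkpoints(100, 10, {35}))   # (104, 1)
# ...but 34 repeated steps if the only checkpoint is at the very end.
print(run_with_checkpoints(100, 100, {35}))  # (134, 1)
```

The trade-off is that more frequent checkpoints reduce wasted compute after a failure but increase the storage and I/O load mentioned above.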

The cloud infrastructure sector is at a crucial turning point from a business standpoint. Market leaders are reinforcing their positions, potentially leading to higher model deployment and operations costs. Nevertheless, this environment also presents opportunities for startups to challenge the established order. These newcomers can carve out their niche by providing comprehensive solutions (e.g., Replicate for hosting and deploying open-source models). They can differentiate themselves with cost-effective offerings, superior customization experiences, and user-friendly options for application deployment, positioning themselves as the go-to solution among specific customer cohorts.

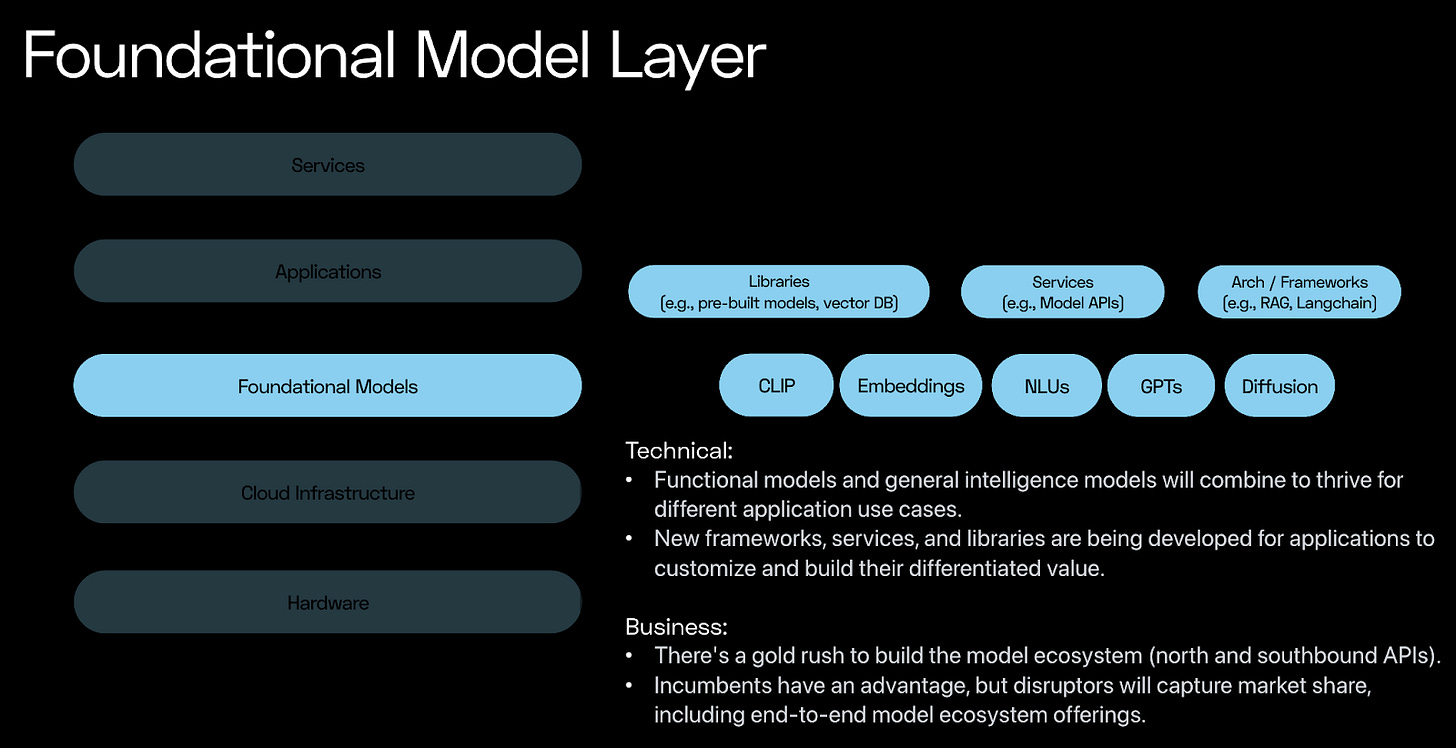

Foundational Model Layer

Foundational models have progressed from basic functionalities like classification, prediction, and recommendation to more complex structures aiming for AGI. This trend is likely to continue, expanding capabilities across multimodality, multitasking, and agentic behavior. As applications begin to leverage these model capabilities, a combination of functional and large dense models will likely be employed to enhance their offerings.

Additionally, significant developments in the model ecosystem, including vector databases, packaged models, datasets, integration services, and frameworks like LangChain, are supporting these advancements.
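For readers unfamiliar with vector databases, the core operation is nearest-neighbor search over embedding vectors. The sketch below is a toy in-memory version using cosine similarity; `TinyVectorStore` is a hypothetical class for illustration, the two-dimensional vectors are made up, and production systems add indexing, persistence, and approximate search on top of this idea.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Toy stand-in for a vector database: brute-force similarity search."""

    def __init__(self):
        self.items = []  # list of (key, vector) pairs

    def add(self, key, vector):
        self.items.append((key, vector))

    def search(self, query, k=1):
        """Return the keys of the k stored vectors most similar to query."""
        ranked = sorted(self.items,
                        key=lambda item: cosine(query, item[1]),
                        reverse=True)
        return [key for key, _ in ranked[:k]]


store = TinyVectorStore()
store.add("hardware", [1.0, 0.0])
store.add("cloud", [0.0, 1.0])
store.add("mixed", [1.0, 1.0])
print(store.search([1.0, 0.1], k=2))  # ['hardware', 'mixed']
```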

From a business perspective, this layer is experiencing intense competition, akin to a gold rush, to establish and dominate the model ecosystem with robust northbound (application-facing) and southbound (cloud-facing) APIs. While established players have a natural advantage due to their resources and market presence, the field is ripe for disruption. New entrants are challenging the status quo and are strategically positioned to capture significant market share by building comprehensive, end-to-end model ecosystems.

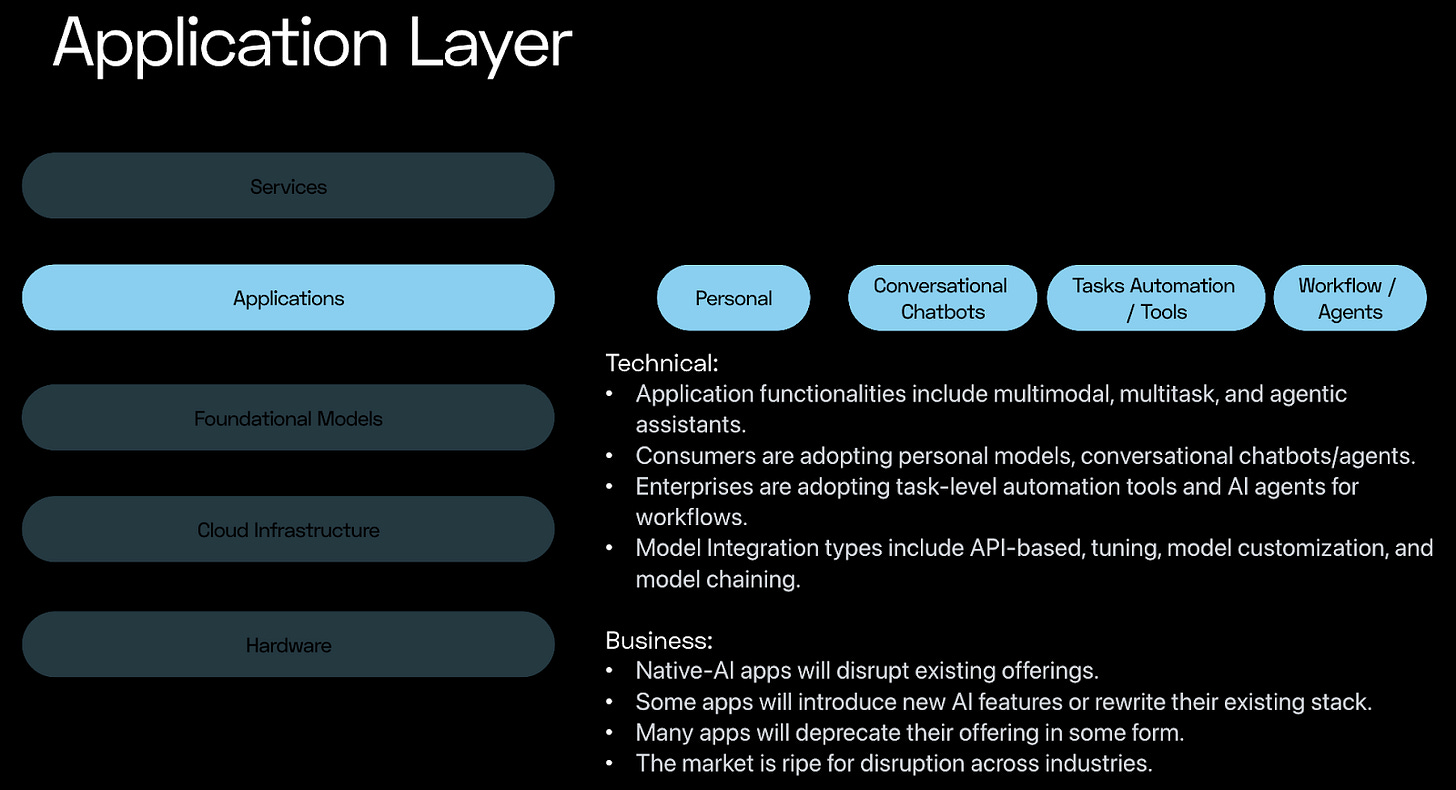

Application Layer

With the evolving foundational model capabilities, the application layer is witnessing a paradigm shift with the rise of multimodal, multitask, and agentic assistants.

On the consumer side, personal models and conversational agents will change the experience layer for many applications, while enterprises will see increased adoption of task-level automation and agentic capabilities for their internal workflows.

Applications now have various options for integrating these models, from API-based solutions to model customization and chaining, each offering a unique path to creating differentiated value and establishing a competitive edge. It is important to weigh these integration options against each other before offering new services to customers.
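To illustrate the difference between a single API-based call and chaining, here is a minimal sketch. `fake_model` is a hypothetical stand-in for a hosted model endpoint (no real provider API is used), and `chain`, `summarize`, and `translate` are illustrative names, not part of any framework.

```python
from typing import Callable, List

Step = Callable[[str], str]


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a call to a hosted model API."""
    return f"[model output for: {prompt}]"


def chain(steps: List[Step]) -> Step:
    """Compose steps so that each step's output feeds the next step."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


# API-based integration: one prompt, one call.
def summarize(text: str) -> str:
    return fake_model("Summarize: " + text)


def translate(text: str) -> str:
    return fake_model("Translate: " + text)


# Chaining: the summary becomes the input to the translation step.
pipeline = chain([summarize, translate])
print(pipeline("quarterly report"))
```

In this sketch the application's differentiation lives in how the steps are defined and ordered, which is one reason chaining is treated as a separate option from a plain API call.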

In business terms, this layer is a hotbed for disruption and innovation. The market is primed for a shake-up, offering immense opportunities for both established entities and new players to innovate, adapt, and thrive in this new AI-driven era.

AI-native applications are set to redefine existing market offerings, compelling current applications to adapt by integrating new AI features or even rewriting their tech stacks.

However, this wave of innovation also implies that some existing applications, especially those heavily reliant on outdated processes, may become obsolete.

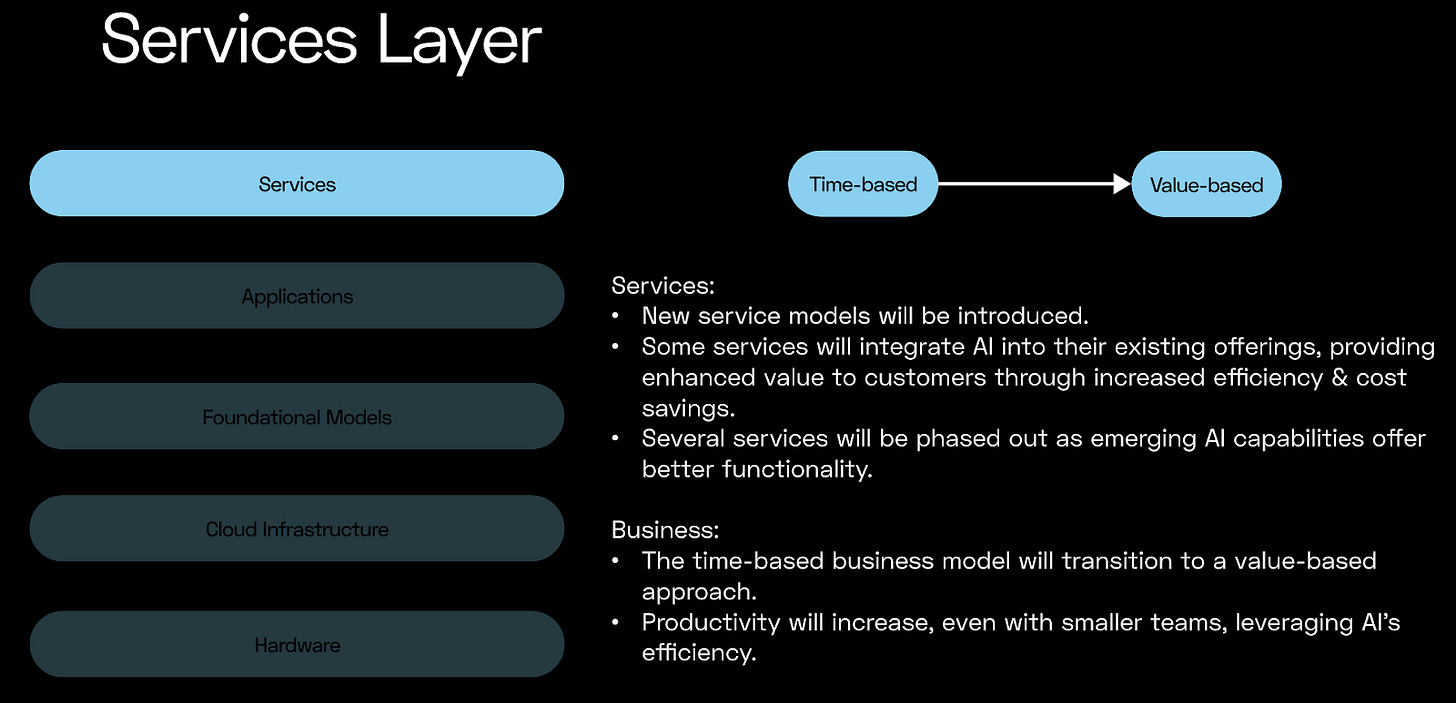

Services Layer

The services layer is embracing AI integration, transforming traditional offerings into more efficient, AI-enhanced solutions. This integration goes beyond mere feature enhancement; it's about fundamentally redefining service value propositions, delivering unprecedented efficiency, and ensuring cost savings. As AI capabilities continue to advance, we're likely to see a significant shift in the service landscape, with some services becoming obsolete and new, more efficient models taking their place.

From a business standpoint, this layer is witnessing a paradigm shift from time-based to value-based business models. The integration of AI is not just improving productivity incrementally; it's redefining it, enabling smaller teams to deliver more by leveraging AI's efficiency. This transition represents a broader shift in business strategy, emphasizing value, efficiency, and innovation as the core drivers of growth and transformation. As this layer continues to evolve, businesses that can adapt to and embrace these new models are likely to thrive in the AI-augmented future.

For more insights or to delve deeper into the AI stack, feel free to reach out at natarajan.sriram@gmail.com.