How Software Engineering is Evolving with LLMs and AI Agents

Software engineering is undergoing a significant transformation, driven by large language models (LLMs), including models trained on code, and by AI agents designed to automate repetitive tasks. This evolution can be mapped into three phases: the traditional development process, augmentation with LLMs, and a near future of agentic workflows. Let's explore each phase in detail, including its benefits and challenges.

Phase 1: Traditional Development Process

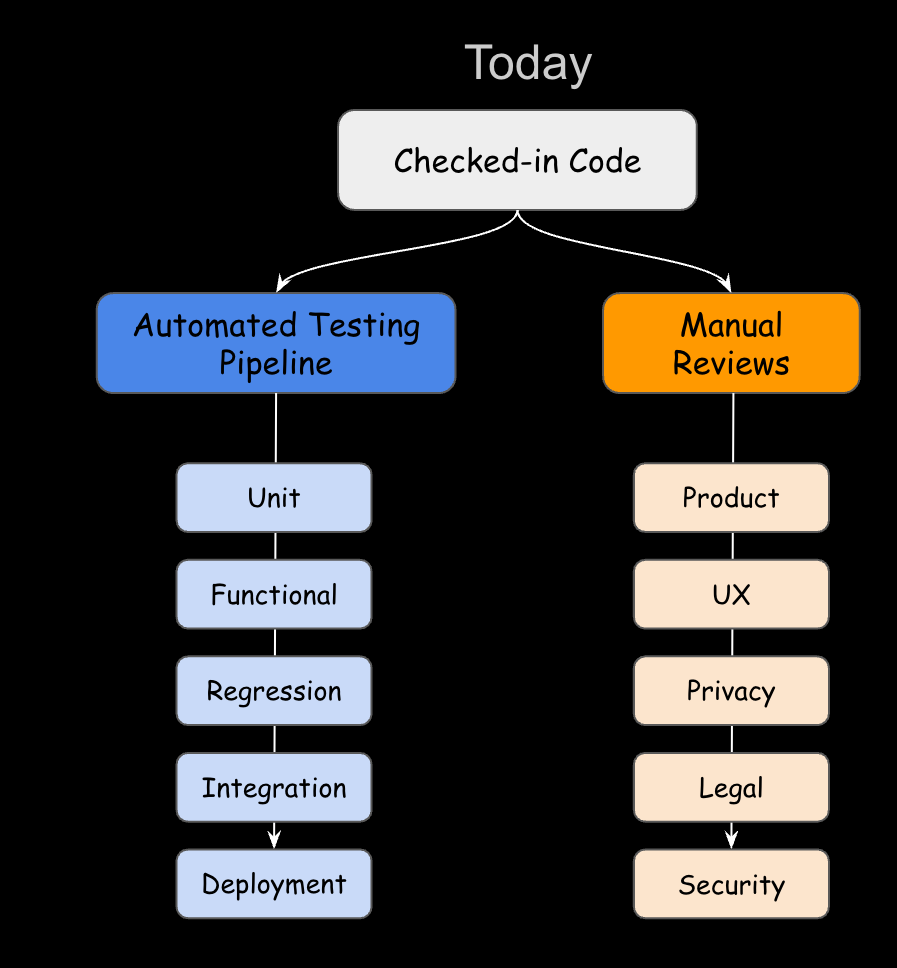

Today, software engineering processes combine automated pipelines with manual reviews. The diagram above illustrates this dual approach:

Automated Testing/Deployment: When a developer checks in code, the system triggers automated Continuous Integration and Continuous Deployment (CI/CD) pipelines that run unit, functional, regression, and integration tests. Developers then review the results manually, fix any issues, and iterate.

Manual Reviews: Once testing and experimentation are complete, the launch process involves several largely manual, human-driven reviews covering product quality, user experience (UX), privacy, legal, risk compliance, and security.
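To make the two halves of this process concrete, here is a minimal Python sketch. It assumes each automated stage can be modeled as a callable that reports pass or fail; the names `Stage`, `run_pipeline`, and `launch_approved` are hypothetical and not part of any CI/CD product. Automated stages run in order and stop at the first failure, while the launch itself is gated on sign-off from every manual review team.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence


@dataclass
class Stage:
    """One automated CI/CD stage; `run` returns True if the stage passed."""
    name: str
    run: Callable[[], bool]


@dataclass
class PipelineResult:
    passed: List[str] = field(default_factory=list)
    failed: Optional[str] = None  # name of the first failing stage, if any


def run_pipeline(stages: List[Stage]) -> PipelineResult:
    """Run stages in order and stop at the first failure (fail-fast)."""
    result = PipelineResult()
    for stage in stages:
        if stage.run():
            result.passed.append(stage.name)
        else:
            result.failed = stage.name
            break
    return result


# Manual review teams named in the launch process above.
REQUIRED_REVIEWS = ("product quality", "ux", "privacy", "legal", "risk compliance", "security")


def launch_approved(signoffs: Dict[str, bool], required: Sequence[str] = REQUIRED_REVIEWS) -> bool:
    """A launch gets the green light only when every required team has signed off."""
    return all(signoffs.get(team, False) for team in required)


# Example: the regression stage fails, so integration tests never run.
result = run_pipeline([
    Stage("unit tests", lambda: True),
    Stage("functional tests", lambda: True),
    Stage("regression tests", lambda: False),
    Stage("integration tests", lambda: True),
])
print(result.passed, result.failed)
# ['unit tests', 'functional tests'] regression tests
print(launch_approved({"product quality": True, "ux": True}))
# False: four teams have not signed off yet
```

The fail-fast loop reflects why developers iterate manually on results: one failing stage blocks everything after it until someone fixes the issue and checks in again.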

This phase benefits from the efficiency and consistency of automated testing and deployment, which significantly enhance productivity. However, it still demands high manual effort: writing test cases, coordinating among developers, testers, and reviewers, planning deployment schedules, and passing multiple reviews before a product gets the green light to launch in production.

Phase 2: Augmenting Software Engineering with LLMs (Ongoing)

The second phase introduces LLMs, which bring advanced reasoning capabilities to the software engineering process. Teams across various roles are exploring how to incorporate LLMs into their existing developer ecosystems. This integration is depicted in the following diagram:

LLM Reasoning Capabilities: LLMs already assist with the following tasks through tools such as GitHub Copilot, Tabnine, CodeRabbit, and Codex, which provide API integrations to support them:

Code Reviews: Automated reviews of code changes, identifying potential issues and suggesting improvements.

Commit Message Generation: Generating meaningful and contextually appropriate commit messages.

Code Improvement Suggestions: Proposing optimizations and enhancements to improve code quality.

Test Case Generation: Creating relevant and comprehensive test cases based on the code.

Bug Triage and Fix Suggestions: Identifying bugs and suggesting possible fixes.

Deployment Issue Detection: Predicting and preventing potential issues during deployment.
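Most of these tasks share one pattern: collect context (a diff, a failing test, a log), wrap it in a prompt, and validate the model's answer. The minimal Python sketch below shows that pattern for commit message generation. `complete` is a hypothetical stand-in for whichever LLM client a team uses, and the prompt wording and 72-character summary limit are illustrative assumptions, not the behavior of any tool named above.

```python
from typing import Callable

# Hypothetical LLM client: takes a prompt, returns the model's text.
# Copilot, Tabnine, CodeRabbit, and Codex each expose their own interfaces;
# none of them is modeled here.
Complete = Callable[[str], str]

PROMPT_TEMPLATE = (
    "Write a commit message for the diff below. Use a summary line of at most "
    "{max_len} characters, then a blank line, then a short explanation of why "
    "the change was made.\n\nDiff:\n{diff}\n"
)


def generate_commit_message(diff: str, complete: Complete, max_len: int = 72) -> str:
    """Ask the model for a commit message, then enforce the summary-line length."""
    if not diff.strip():
        raise ValueError("empty diff: nothing to describe")
    raw = complete(PROMPT_TEMPLATE.format(max_len=max_len, diff=diff)).strip()
    summary, _, body = raw.partition("\n")
    # Models do not always respect length limits, so truncate defensively.
    if len(summary) > max_len:
        summary = summary[: max_len - 3].rstrip() + "..."
    body = body.strip()
    return f"{summary}\n\n{body}" if body else summary


def fake_model(prompt: str) -> str:
    """Stub standing in for a real LLM call, for illustration only."""
    return "Fix off-by-one error in pagination\n\nThe last page was skipped."


diff = "-    return range(n - 1)\n+    return range(n)\n"
print(generate_commit_message(diff, fake_model))
```

In practice the staged changes could come from `git diff --cached`. The same wrap-and-validate structure applies to review comments or test generation; only the prompt and the validation step change.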

In this phase, LLMs improve code quality through automated reviews and suggestions, reduce the manual burden by handling repetitive tasks, and accelerate development cycles. However, the actual productivity gains are limited, because LLMs mostly automate smaller tasks within a workflow that is already heavily automated.

It is important to assess whether the value justifies the cost and delivers a good return on investment for the company. In addition, integrating LLMs into existing workflows is complex, and model accuracy still needs improvement. Anthropic's recently published evaluation results for Claude 3.5 highlight these aspects.

The model works well for prototypes and explorations: I recently built a memory card game with a single prompt, and it worked without any code changes. Production-level integrations, however, still require significant effort and refinement.
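The return-on-investment question raised in this phase can be framed as simple arithmetic. The sketch below is a hypothetical model, not an established formula: it assumes the value of an LLM tool is the developer time it saves, and that cost includes both per-seat licensing and the ongoing effort of integrating the tool into existing workflows. All numbers are placeholders, not measured data.

```python
def monthly_roi(
    devs: int,
    hours_saved_per_dev: float,
    hourly_cost: float,
    tool_cost_per_dev: float,
    integration_cost: float = 0.0,
) -> float:
    """ROI as a ratio: (value of time saved - total cost) / total cost, per month."""
    value = devs * hours_saved_per_dev * hourly_cost
    cost = devs * tool_cost_per_dev + integration_cost
    if cost <= 0:
        raise ValueError("total cost must be positive")
    return (value - cost) / cost


# Illustrative inputs only: 50 developers, $80/hour, $20 per seat per month,
# and $3,000 per month to build and maintain integrations.
print(monthly_roi(50, 2, 80, 20, 3000))  # 1.0: value is twice the cost
print(monthly_roi(50, 1, 80, 20, 3000))  # 0.0: break-even
```

With these placeholder numbers, halving the time saved moves the tool from a 100% return to break-even, and the integration cost outweighs the seat cost, which is why integration complexity matters as much as model quality when judging the investment.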

Phase 3: The Near Future with Software Agentic Workflows

The third phase introduces AI agents with reasoning capabilities that can handle complex workflows semi-autonomously. The diagram below illustrates this future state:

Consider an agentic workflow that processes test results, wireframes designed by the UX team, demo videos from developers, and a collection of best-practice and guideline documents. The workflow uses this information as context to create a review document that serves as a shared guide across teams for assessing what works well and what needs improvement. This way, the time spent on sequential reviews across teams (Product, UX, Privacy, Legal, Security) can be significantly shortened, and everyone stays on the same page.
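A minimal Python sketch of this workflow follows. It assumes the wireframes and demo videos have already been turned into text (for example, annotations and transcripts) and that `complete` is a hypothetical LLM client; `ReviewContext` and `build_review_document` are illustrative names, not an existing framework. The agent drafts one section per team from the same shared context, and because the sections are independent, it drafts them concurrently rather than one after another.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical LLM client: prompt in, text out.
Complete = Callable[[str], str]

REVIEW_TEAMS = ["Product", "UX", "Privacy", "Legal", "Security"]


@dataclass
class ReviewContext:
    test_results: str
    wireframe_notes: str   # assumed: text annotations of the UX wireframes
    demo_transcript: str   # assumed: demo videos transcribed beforehand
    guidelines: Dict[str, str] = field(default_factory=dict)  # team -> guideline text


def build_review_document(ctx: ReviewContext, complete: Complete) -> str:
    """Draft one shared review document with a section per team."""
    shared = (
        f"Test results:\n{ctx.test_results}\n\n"
        f"Wireframes:\n{ctx.wireframe_notes}\n\n"
        f"Demo:\n{ctx.demo_transcript}\n"
    )

    def draft(team: str) -> str:
        prompt = (
            f"{shared}\n{team} guidelines:\n"
            f"{ctx.guidelines.get(team, 'None provided.')}\n\n"
            f"As the {team} reviewer, list what works well and what needs improvement."
        )
        return f"## {team} review\n{complete(prompt).strip()}"

    # Draft all team sections concurrently instead of one review after another;
    # pool.map returns results in the same order as REVIEW_TEAMS.
    with ThreadPoolExecutor(max_workers=len(REVIEW_TEAMS)) as pool:
        sections = list(pool.map(draft, REVIEW_TEAMS))
    return "# Launch review draft\n\n" + "\n\n".join(sections)


ctx = ReviewContext(
    test_results="All integration tests passed.",
    wireframe_notes="Checkout flow, three screens.",
    demo_transcript="Developer walks through checkout.",
    guidelines={"Privacy": "Do not log payment details."},
)
print(build_review_document(ctx, lambda prompt: "(model output here)"))
```

The output is a starting draft for the human reviewers, not a sign-off; each team still owns its final decision.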

This phase promises transformative benefits, including significantly faster task execution, reduced workforce requirements, and substantial cost savings. Increased automation also allows more experimentation and rapid iteration, further boosting productivity. However, it poses challenges as well: even semi-autonomous AI agents are complex to implement and need human supervision and iteration before they can be used successfully in production. A lot can go wrong here, so we need to adhere to ethical standards and privacy regulations while building trust that AI can handle critical tasks reliably.
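One common way to keep a semi-autonomous agent under human supervision is an approval gate: the agent proposes actions, low-risk ones run automatically, and anything riskier waits for a person. The sketch below assumes a simple two-level risk label and hypothetical `approve` and `execute` callbacks; it illustrates the supervision pattern, not a production safety mechanism.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProposedAction:
    description: str
    risk: str  # assumed labels: "low" or "high"


def execute_with_oversight(
    actions: List[ProposedAction],
    approve: Callable[[ProposedAction], bool],
    execute: Callable[[ProposedAction], None],
) -> List[str]:
    """Run low-risk actions directly; anything else needs human approval.

    Unknown risk labels are treated like "high" so the gate fails safe.
    Returns an audit log describing what happened to each action.
    """
    log = []
    for action in actions:
        if action.risk != "low" and not approve(action):
            log.append(f"rejected: {action.description}")
            continue
        execute(action)
        log.append(f"executed: {action.description}")
    return log


log = execute_with_oversight(
    [
        ProposedAction("Regenerate flaky test snapshot", "low"),
        ProposedAction("Deploy build to production", "high"),
    ],
    approve=lambda action: False,  # stand-in for a human declining the deployment
    execute=lambda action: None,   # stand-in for the real side effect
)
print(log)
# ['executed: Regenerate flaky test snapshot', 'rejected: Deploy build to production']
```

The audit log also supports the trust-building point: every action the agent took, or was stopped from taking, is recorded for later review.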

Conclusion

The integration of LLMs and AI agents into software engineering marks a revolutionary shift. As the industry moves from today's mix of automated and manual processes toward increasingly agentic workflows, it is poised for major gains in efficiency, innovation, and productivity. Embracing these advancements will be crucial for companies aiming to stay competitive in a rapidly evolving technological landscape.

I am actively building an open-source version of this Software Agentic Workflow. If you are interested in contributing, please contact me at natarajan.sriram@gmail.com.